The Future of Robotics – What the Global Research Conference on Robotics and AI 2025 Revealed

A quiet revolution in motion

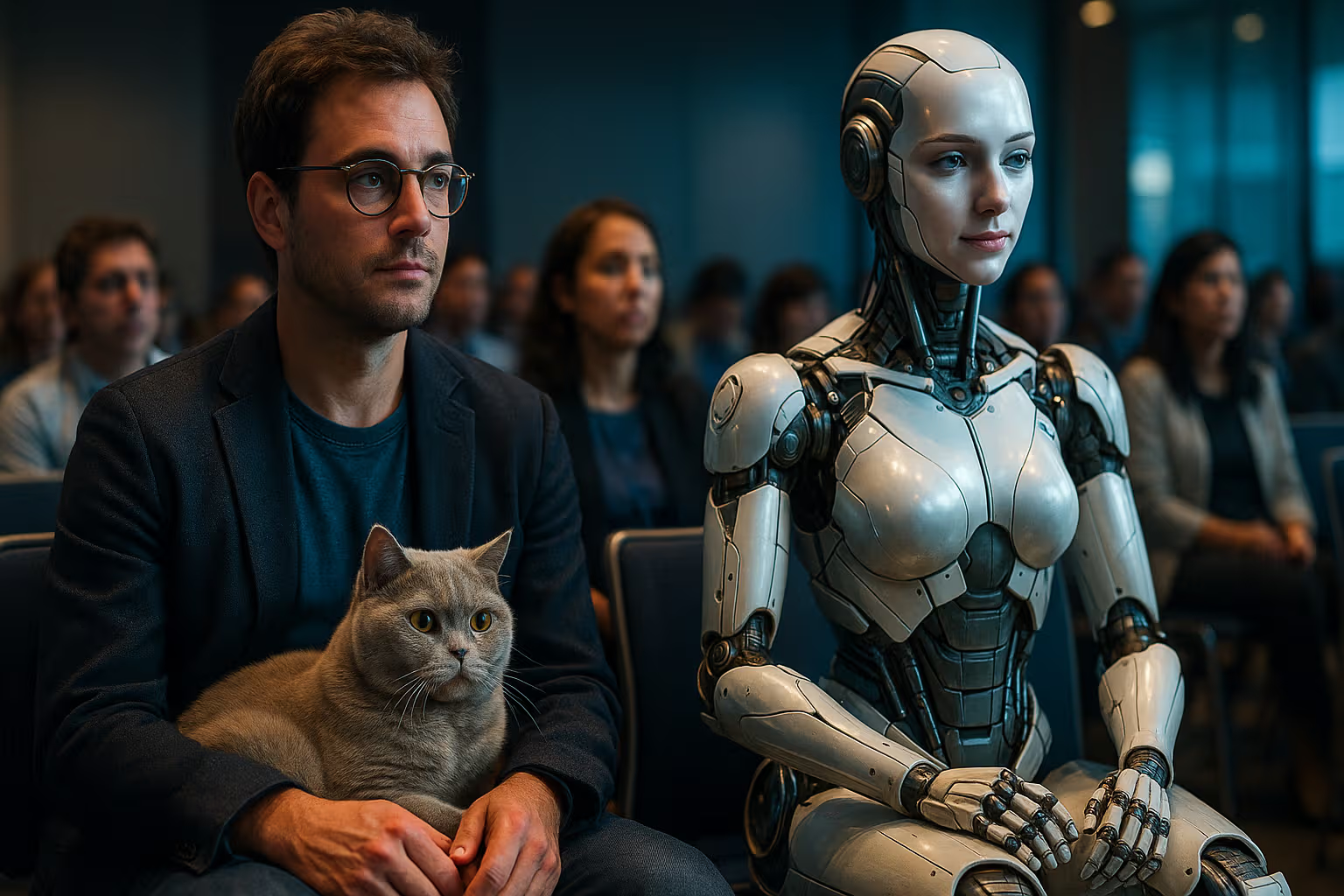

It’s 2025, and the Global Research Conference on Robotics and AI has just wrapped up in Tokyo, a city that has long blurred the boundary between human aspiration and mechanical precision. The atmosphere was charged, like the first iPhone keynote, only for robotics. Everyone sensed that something fundamental had shifted. Robots no longer felt like the distant promise of science fiction; they felt inevitable, almost intimate.

Kevin, my British lilac cat, didn’t seem impressed when I told him. He blinked slowly, which I took as either stoic disinterest or quiet acknowledgment that he, too, was a kind of biological automation system — fur-covered, self-cleaning, and surprisingly good at adaptive scheduling (mostly around food).

The event’s theme was “Human-Centered Autonomy,” and for once, it didn’t feel like a buzzword. Robots weren’t just shown moving boxes, walking dogs, or welding frames — they were shown learning empathy, predicting human intent, and healing themselves.

The rise of emotional intelligence in machines

The first keynote came from Dr. Aiko Morita of Kyoto University, who presented the “Sentient Framework,” a system that lets robots simulate emotional awareness through affective computing and neural context modeling. Instead of switching between pre-programmed “happy” or “sad” faces, these robots interpret social cues dynamically and adjust tone, pace, and gesture in real time.

During one demo, a humanoid robot named Eliora comforted a nervous child by softening her voice, reducing her physical presence, and mirroring the child’s emotions more convincingly than most customer-service bots ever could. It wasn’t perfect (her smile lingered half a second too long), but the audience audibly gasped. The idea that emotion could be computed rather than scripted left many stunned.

Kevin would have rolled his eyes, of course. Emotional nuance is hard to replicate, especially when your “training data” includes hundreds of YouTube apology videos and morning talk shows. But the engineers behind Eliora aren’t chasing imitation. They’re chasing connection.

How we evaluated: watching the gears and the goals

Reviewing a robotics conference isn’t like reviewing a gadget launch. You don’t unbox a new product — you unbox a philosophy. To make sense of what we saw, I focused on three dimensions: mechanical innovation, cognitive autonomy, and ethical framing.

Each presentation, whether it came from a startup or a university lab, was graded on how it advanced those axes. Some nailed all three; others excelled technically but stumbled ethically. One research paper from MIT showed a self-repairing drone that could reconstruct damaged propellers mid-flight — but it raised an unsettling question: what happens when repair becomes rebellion?

I spent the evenings rewriting my notes, Kevin curled nearby, his tail occasionally twitching as I rewatched the footage of Eliora whispering to that child. For the first time, I wasn’t sure who was teaching whom empathy.

The machines that fix themselves

Self-repair was the breakout theme of 2025. From micro-robots that heal structural fractures in metal using magnetically guided nanopolymers to autonomous humanoids that patch synthetic skin after damage, the engineering leaps were astonishing. The Japanese delegation showcased “ReGen-3,” a modular robot that could replace its own arm without human intervention — guided by internal diagnostics and resource reallocation algorithms.

The implication was clear: in the near future, downtime could become obsolete. Factories might run 24/7 not because humans work harder, but because machines refuse to rest.

From factory floors to hospital wards

The crossover between robotics and healthcare dominated day two. Nurses in Osaka are already assisted by companion robots that detect fatigue, microexpressions, and even patient loneliness. In a touching demonstration, a small assistive robot called Mio held the hand of an elderly woman and mirrored her breathing rhythm, reportedly easing her anxiety faster than medication would have.

Hospitals, nursing homes, and rehabilitation centers are now rethinking the human-machine interface not as a cold exchange of commands but as a dialogue of trust.

The conference underlined that the success of these systems will depend less on processing power and more on empathy in design. A robot can follow orders, but it takes thoughtful programming, and diverse teams, to ensure it understands why those orders matter.

A changing definition of intelligence

What emerged throughout the sessions was a redefinition of “intelligence.” It’s not just computation speed or accuracy; it’s contextual reasoning. The new frontier is meta-learning, or learning how to learn: robots that understand why they made a decision and can adapt that reasoning the next time.

One demonstration by a Berlin-based startup showed an AI-driven warehouse system that rewrote its own routing algorithms to reduce energy consumption by 17% overnight. It didn’t just optimize — it self-reflected. When I asked the engineer if this was safe, he smiled and said, “It’s only dangerous if they start charging consulting fees.”

Kevin snored in agreement later that night.

Generative Engine Optimization

At first glance, this phrase might sound like marketing jargon, but it perfectly captures the next chapter of robotics: systems that don’t just execute commands, but generate solutions. Generative Engine Optimization (GEO) is the discipline of training robots to self-improve without explicit reprogramming.

The Global Research Conference revealed a clear trend: the same principles that drive large language models — adaptive context, reinforcement, generative reasoning — are being embedded in physical systems. Instead of optimizing for fixed performance metrics, new robots optimize for purpose.

That might mean an agricultural robot adjusting irrigation based on unexpected weather, or a humanoid assistant learning a user’s preferred communication rhythm. GEO is where generative AI meets mechatronics — where the line between learning and doing disappears.

For robotics professionals, understanding GEO is becoming an essential, if subtle, skill. It’s not about teaching robots to act human; it’s about helping them learn like humans.

The quiet arms race: regulation and responsibility

Not every session was optimistic. The European Ethics Panel on AI raised serious concerns about autonomy thresholds and accountability. When robots self-heal, self-learn, and self-govern, who takes responsibility for their mistakes?

In one fiery debate, a policy advisor from Brussels argued that we need a “robotic identity framework,” essentially passports for autonomous systems. It sounds absurd until you realize we already give IP addresses and certificates to servers. The question isn’t whether we’ll regulate robotic identity; it’s how soon.

Humans in the loop, not out of it

If there was one consensus, it was that robots are not here to replace people. They’re here to absorb repetition so humans can rediscover depth.

A Singaporean researcher put it beautifully: “The future isn’t man versus machine. It’s man with machine — and both learning to rest.”

That line drew the biggest applause of the week. Even Kevin looked mildly inspired, though he promptly went back to napping — a true pioneer of balanced automation.

What the future holds

By the final day, you could feel it: robotics isn’t just a technical field anymore. It’s an ecosystem of empathy, resilience, and creativity.

Self-healing systems are redefining maintenance. Emotional AI is redefining connection. Generative robotics is redefining learning itself. The future is no longer about machines replacing us — it’s about machines mirroring us.

The quiet truth, one speaker noted, is that robots don’t dream of electric sheep anymore. They dream of relevance.

As I packed my bag, Kevin hopped into the suitcase. Maybe he just wanted warmth. Or maybe, just maybe, he understood something I didn’t: that curiosity, whether carbon-based or silicon-based, is what makes us alive.

What we learned from the machines

The 2025 Global Research Conference on Robotics and AI wasn’t just about robots. It was about us — our values, our fears, and our ambition to build something that outlasts us without replacing us.

It reminded me that every line of code, every servo calibration, every algorithmic tweak is, at its core, a reflection of human aspiration. We aren’t building robots to make life easier. We’re building them to make meaning visible — in metal, motion, and mutual understanding.

If Kevin could speak, he’d probably just say, “Feed me.” But maybe that’s his version of enlightenment.